What's new in Apache Karaf Decanter 2.7.0?

The Apache Karaf Decanter 2.7.0 release is currently up for a vote.

I'm getting a little ahead of the release to highlight what's coming ;)

Karaf Decanter 2.7.0 is an important milestone as it brings new features, especially around big data and cloud.

HDFS and S3 appenders

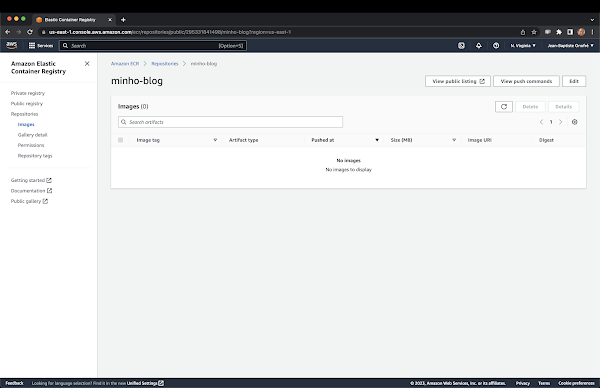

Decanter 2.7.0 brings two new appenders: HDFS and S3. The HDFS appender stores the collected data on HDFS (using CSV format by default). Similarly, the S3 appender stores the collected data as an object in an S3 bucket.

Let's illustrate this with a simple use case using the S3 appender. First, let's create an S3 bucket on AWS. We now have our decanter-test S3 bucket ready.

Let's start a Karaf instance with the Decanter S3 appender enabled.

Then, we configure the S3 appender in etc/org.apache.karaf.decanter.appender.s3.cfg:

###############################
# Decanter Appender S3 Configuration
###############################

# AWS credentials
accessKeyId=...
secretKeyId=...

# AWS Region (optional)
region=eu-west-3

# S3 bucket name
bucket=decanter-test

# Marshaller to use
marshaller.target=(dataFormat=json)

We also install the OSHI collector to get machine details:

karaf@root()> feature:install decanter-collector-oshi

Then, we can see the objects created in the S3 bucket.

Grafana Loki appender

Decanter 2.7.0 also brings another new appender: the Grafana Loki appender. Loki is a horizontally-scalable, highly-available, multi-tenant log aggregation system inspired by Prometheus. You can use Loki in Grafana by adding the corresponding datasource.

You can download Loki from https://github.com/grafana/loki/releases. First, we create the Loki configuration as a YAML file:

auth_enabled: false

server:
  http_listen_port: 3100

ingester:
  lifecycler:
    address: 127.0.0.1
    ring:
      kvstore:
        store: inmemory
      replication_factor: 1
    final_sleep: 0s
  chunk_idle_period: 1h       # Any chunk not receiving new logs in this time will be flushed
  max_chunk_age: 1h           # All chunks will be flushed when they hit this age, default is 1h
  chunk_target_size: 1048576  # Loki will attempt to build chunks up to 1.5MB, flushing first if chunk_idle_period or max_chunk_age is reached first
  chunk_retain_period: 30s    # Must be greater than index read cache TTL if using an index cache (Default index read cache TTL is 5m)
  max_transfer_retries: 0     # Chunk transfers disabled

schema_config:
  configs:
    - from: 2020-10-24
      store: boltdb-shipper
      object_store: filesystem
      schema: v11
      index:
        prefix: index_
        period: 24h

storage_config:
  boltdb_shipper:
    active_index_directory: /tmp/loki/boltdb-shipper-active
    cache_location: /tmp/loki/boltdb-shipper-cache
    cache_ttl: 24h            # Can be increased for faster performance over longer query periods, uses more disk space
    shared_store: filesystem
  filesystem:
    directory: /tmp/loki/chunks

compactor:
  working_directory: /tmp/loki/boltdb-shipper-compactor
  shared_store: filesystem

limits_config:
  reject_old_samples: true
  reject_old_samples_max_age: 168h

chunk_store_config:
  max_look_back_period: 0s

table_manager:
  retention_deletes_enabled: false
  retention_period: 0s

ruler:
  storage:
    type: local
    local:
      directory: /tmp/loki/rules
  rule_path: /tmp/loki/rules-temp
  alertmanager_url: http://localhost:9093
  ring:
    kvstore:
      store: inmemory
  enable_api: true

Then, we start Loki using this configuration file:

./loki-darwin-amd64 --config.file=loki-config.yaml

Loki is now available on http://localhost:3100.

The Decanter Loki appender converts all collected data into log messages and stores them in Loki, sending them via the Loki REST API.

We just have to install the Decanter Loki appender and configure the Loki location in etc/org.apache.karaf.decanter.appender.loki.cfg:

karaf@root()> feature:install decanter-appender-loki

######################################
# Decanter Loki Appender Configuration
######################################

# Loki push API location
loki.url=http://localhost:3100/loki/api/v1/push

# Loki tenant
loki.tenant=decanter

# Loki basic authentication
#loki.username=
#loki.password=

# Marshaller
#marshaller.target=(dataFormat=raw)
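To give an idea of what a call to the push endpoint configured in loki.url looks like, here is a minimal sketch in plain Java (11+). It is not the Decanter appender code: the class name LokiPushSketch, the job label and the use of the X-Scope-OrgID header for the tenant are assumptions made for illustration. Only the JSON shape of the body (streams, stream labels, nanosecond timestamp plus log line) comes from the Loki push API.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.time.Instant;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical helper, not part of Decanter: builds a Loki push request by hand.
public class LokiPushSketch {

    // Minimal JSON string escaping (backslash, quote, newline);
    // a real implementation would rely on a JSON library.
    static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"").replace("\n", "\\n");
    }

    // Body expected by POST /loki/api/v1/push:
    // {"streams":[{"stream":{<labels>},"values":[["<epoch ns>","<line>"]]}]}
    static String payload(Map<String, String> labels, Instant timestamp, String line) {
        String stream = labels.entrySet().stream()
                .map(e -> "\"" + escape(e.getKey()) + "\":\"" + escape(e.getValue()) + "\"")
                .collect(Collectors.joining(","));
        // Loki expects the timestamp as a string of nanoseconds since the epoch.
        String nanos = String.valueOf(
                timestamp.getEpochSecond() * 1_000_000_000L + timestamp.getNano());
        return "{\"streams\":[{\"stream\":{" + stream + "},\"values\":[[\""
                + nanos + "\",\"" + escape(line) + "\"]]}]}";
    }

    // Assumption: the tenant is passed in the X-Scope-OrgID header.
    static HttpRequest request(String url, String tenant, String body) {
        return HttpRequest.newBuilder(URI.create(url))
                .header("Content-Type", "application/json")
                .header("X-Scope-OrgID", tenant)
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
    }

    public static void main(String[] args) {
        String body = payload(Map.of("job", "decanter"), Instant.now(), "hello from decanter");
        HttpRequest req = request("http://localhost:3100/loki/api/v1/push", "decanter", body);
        System.out.println(req.method() + " " + req.uri());
        System.out.println(body);
        // To actually send it:
        // HttpClient.newHttpClient().send(req, HttpResponse.BodyHandlers.ofString());
    }
}
```

The request is only built and printed here, so you can run the sketch without a Loki instance and compare the body with what you see in Grafana later.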

Apache Druid collector

Decanter 2.7.0 also brings new collectors. The first one is the Apache Druid collector. I already talked about Druid in a previous blog post: https://nanthrax.blogspot.com/2021/01/complete-metrics-collections-and.html.

The Decanter Druid collector is a scheduled collector: it periodically executes queries on the Druid API. We can install the Druid collector:

karaf@root()> feature:install decanter-collector-druid

Then, you can configure the location of the Druid API and the queries you want to execute in etc/org.apache.karaf.decanter.collector.druid.cfg:

#
# Druid broker query API location
#
druid.broker.location=http://localhost:8888/druid/v2/sql/

# Druid queries set, using syntax: query.id
query.foo=select sum_operatingSystem_threadCount from decanter

Each query is a property prefixed with query., followed by the query id. For instance, you can configure multiple queries using:

query.foo=select count(*) from decanter

query.bar=select bar from decanter
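To make the query.id convention more concrete, here is a small sketch in plain Java showing how such a configuration could be read: it collects every property starting with query. and wraps each SQL statement in the JSON body that the Druid SQL endpoint accepts. This is only an illustration, not the collector's actual code; the class and method names (DruidQueriesSketch, queries, sqlRequestBody) are made up for the example.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.Map;
import java.util.Properties;
import java.util.TreeMap;

// Hypothetical helper, not part of Decanter.
public class DruidQueriesSketch {

    // Collect every "query.<id>" property into an id -> SQL map (sorted by id).
    static Map<String, String> queries(Properties config) {
        Map<String, String> result = new TreeMap<>();
        for (String key : config.stringPropertyNames()) {
            if (key.startsWith("query.")) {
                result.put(key.substring("query.".length()), config.getProperty(key));
            }
        }
        return result;
    }

    // The Druid SQL endpoint accepts a JSON body of the form {"query":"<sql>"}.
    static String sqlRequestBody(String sql) {
        return "{\"query\":\"" + sql.replace("\\", "\\\\").replace("\"", "\\\"") + "\"}";
    }

    public static void main(String[] args) throws IOException {
        Properties config = new Properties();
        config.load(new StringReader(String.join("\n",
                "druid.broker.location=http://localhost:8888/druid/v2/sql/",
                "query.foo=select count(*) from decanter",
                "query.bar=select bar from decanter")));
        String location = config.getProperty("druid.broker.location");
        // Print what would be posted for each configured query.
        queries(config).forEach((id, sql) ->
                System.out.println(id + " -> POST " + location + " " + sqlRequestBody(sql)));
    }
}
```

The id (foo, bar) is only used to tell the queries apart, so you can add as many query.xxx properties as you need.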

OpenStack collector

Another new collector is the OpenStack collector. The Decanter OpenStack collector is a scheduled collector: it periodically queries the OpenStack APIs to get metrics about OpenStack services. We can install the OpenStack collector:

karaf@root()> feature:install decanter-collector-openstack

This feature also adds the etc/org.apache.karaf.decanter.collector.openstack.cfg configuration file, where you define which services you want to query and the location of these services:

#
# Decanter Openstack collector
#
# Openstack services API locations
#
openstack.identity=http://localhost/identity
openstack.project=xxxxxxx
openstack.username=admin
openstack.password=secret
openstack.domain=default

openstack.compute.enabled=true
openstack.compute=http://localhost/compute/v2.1

openstack.block.storage.enabled=true
openstack.block.storage=http://localhost/volume/v3

openstack.image.enabled=true
openstack.image=http://localhost/image

openstack.metric.enabled=true
openstack.metric=http://localhost/metric

# Unmarshaller to use
unmarshaller.target=(dataFormat=json)
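The configuration pairs each service location with an enabled flag. As a rough sketch of how such pairs can be interpreted (this is not the collector's code; the class name OpenStackServicesSketch and the choice to treat a missing flag as disabled are assumptions for the example), we can resolve the list of services to query like this:

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Properties;

// Hypothetical helper, not part of Decanter.
public class OpenStackServicesSketch {

    // Service keys as they appear in the configuration file above.
    static final String[] SERVICES = {"compute", "block.storage", "image", "metric"};

    // Return service -> API location for every service whose ".enabled" flag is true.
    // Assumption: a missing flag means the service is disabled.
    static Map<String, String> enabledServices(Properties config) {
        Map<String, String> enabled = new LinkedHashMap<>();
        for (String service : SERVICES) {
            String key = "openstack." + service;
            if (Boolean.parseBoolean(config.getProperty(key + ".enabled", "false"))) {
                enabled.put(service, config.getProperty(key));
            }
        }
        return enabled;
    }

    public static void main(String[] args) {
        Properties config = new Properties();
        config.setProperty("openstack.compute.enabled", "true");
        config.setProperty("openstack.compute", "http://localhost/compute/v2.1");
        config.setProperty("openstack.image.enabled", "false");
        config.setProperty("openstack.image", "http://localhost/image");
        // Only compute is enabled here, so only its location is listed.
        System.out.println(enabledServices(config));
    }
}
```

In other words, to stop polling a service you only have to switch its enabled flag to false; the location can stay in the file.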

The OpenStack collector will likely be improved based on user feedback ;)

Refactoring of Apache Camel collectors and Camel 3.x upgrade

Karaf Decanter now fully supports Camel 3.x (and still works with Camel 2.x). The Decanter Camel collector actually provides two collectors:

- Camel interceptor strategy (aka tracer)
- Camel event notifier

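DecanterInterceptStrategy:

// Create the Decanter intercept strategy (the tracer)
DecanterInterceptStrategy decanterCamelTracer = new DecanterInterceptStrategy();
// eventAdmin is the dispatcher used to send the collected events
decanterCamelTracer.setDispatcher(eventAdmin);
// Register the strategy on the Camel context
camelContext.addInterceptStrategy(decanterCamelTracer);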
