Apache Karaf Decanter 2.3.0, the new alerting service

Apache Karaf Decanter 2.3.0 will be released soon. This release brings a lot of fixes, improvements and new features.

In this blog post, we will focus on one major refactoring done in this version: the alerting service.

Goodbye checker, welcome alerting service

Before Karaf Decanter 2.3.0, the alert rules were defined in a configuration file named etc/org.apache.karaf.decanter.alerting.checker.cfg.

The configuration was simple. For instance:

message.warn=match:.*foobar.*

But the checker has three limitations:

- it’s not possible to define a check on several attributes at the same time. For instance, it’s not possible to have a rule with something like if message == 'foo' and other == 'bar'.

- it’s not possible to have a “time scoped” rule. For instance, I want to have an alert only if a counter is greater than a value for x minutes.

- somewhat related to the previous point, recoverable alerts are not perfect in the checker. Whether an alert is recoverable should be a setting of the alert rule, depending on what the user wants.

Given those limitations, we completely refactored the checker: here’s the new alerting service, replacing the “old” checker.

The alerting service is configured in a configuration file named etc/org.apache.karaf.decanter.alerting.service.cfg.

“Regular” alerts

Now, each rule has a name and its configuration is defined in JSON:

rule.myfirstalert="{'condition':'message:*','level':'WARN'}"

To test this, we can install the Decanter log alerter and the rest-servlet collector:

karaf@root()> feature:install decanter-alerting-log
karaf@root()> feature:install decanter-collector-rest-servlet

Now, using curl, we can send the following message to the REST collector:

curl -X POST -H "Content-Type: application/json" -d "{\"message\":\"test\"}" http://localhost:8181/decanter/collect

Then, we can see the following in the log:

17:59:05.273 WARN [EventAdminAsyncThread #22] DECANTER ALERT: condition message:*
17:59:05.273 WARN [EventAdminAsyncThread #22] hostName:LT-C02R90TRG8WM
alertUUID:b7bb6f5f-b3fd-48bb-bc2b-0f9d1450f21e
alertPattern:message:*
component.name:org.apache.karaf.decanter.collector.rest.servlet
alertLevel:WARN
felix.fileinstall.filename:file:/Users/jbonofre/Downloads/apache-karaf-4.2.8/etc/org.apache.karaf.decanter.collector.rest.servlet.cfg
unmarshaller.target:(dataFormat=json)
message:test
type:restservlet
decanter.collector.name:rest-servlet
service.pid:org.apache.karaf.decanter.collector.rest.servlet
component.id:14
alertBackToNormal:false
payload:{"message":"test"}
karafName:root
alias:/decanter/collect
alertTimestamp:1585328345257
hostAddress:192.168.0.11
timestamp:1585328345257
event.topics:decanter/alert/WARN

“Recoverable” alerts

We can now add a second rule defined as recoverable. Let’s add the following in etc/org.apache.karaf.decanter.alerting.service.cfg:

rule.mysecondalert="{'condition':'counter:[50 TO 100]','level':'WARN','recoverable':true}"

Now, we send the following message using curl:

curl -X POST -H "Content-Type: application/json" -d "{\"counter\":60}" http://localhost:8181/decanter/collect

We can see in the log:

18:15:48.995 WARN [EventAdminAsyncThread #15] DECANTER ALERT: condition counter:[50 TO 100]
18:15:48.995 WARN [EventAdminAsyncThread #15] hostName:LT-C02R90TRG8WM
alertUUID:a19c9715-6ff9-46e5-b45c-0f1f1abd7b75
alertPattern:counter:[50 TO 100]
component.name:org.apache.karaf.decanter.collector.rest.servlet
alertLevel:WARN
felix.fileinstall.filename:file:/Users/jbonofre/Downloads/apache-karaf-4.2.8/etc/org.apache.karaf.decanter.collector.rest.servlet.cfg
unmarshaller.target:(dataFormat=json)
counter:60
type:restservlet
decanter.collector.name:rest-servlet
service.pid:org.apache.karaf.decanter.collector.rest.servlet
component.id:14
alertBackToNormal:false
payload:{"counter":60}
karafName:root
alias:/decanter/collect
alertTimestamp:1585329348945
hostAddress:192.168.0.11
timestamp:1585329348945
event.topics:decanter/alert/WARN

Now, we send another message using curl:

curl -X POST -H "Content-Type: application/json" -d "{\"counter\":70}" http://localhost:8181/decanter/collect

No new message appears in the log, which is expected because the alert is already “active”. We can see the “active” alerts using the decanter:alerts command:

karaf@root()> decanter:alerts
=========================================
| hostName = LT-C02R90TRG8WM
| alertUUID = a19c9715-6ff9-46e5-b45c-0f1f1abd7b75
| component.name = org.apache.karaf.decanter.collector.rest.servlet
| felix.fileinstall.filename = file:/Users/jbonofre/Downloads/apache-karaf-4.2.8/etc/org.apache.karaf.decanter.collector.rest.servlet.cfg
| unmarshaller.target = (dataFormat=json)
| counter = 60
| type = restservlet
| alertRule = mysecondalert
| decanter.collector.name = rest-servlet
| service.pid = org.apache.karaf.decanter.collector.rest.servlet
| component.id = 14
| event.topics = decanter/collect/rest-servlet
| payload = {"counter":60}
| karafName = root
| alias = /decanter/collect
| alertTimestamp = 1585329348945
| hostAddress = 192.168.0.11
| timestamp = 1585329348945

So, we can see our “ongoing” alert.

Now, let’s send a new message with a counter value outside the range of the alert rule condition, meaning that the alert is “recovered”:

curl -X POST -H "Content-Type: application/json" -d "{\"counter\":10}" http://localhost:8181/decanter/collect

Then, we can see in the log:

18:19:38.529 INFO [EventAdminAsyncThread #21] DECANTER ALERT BACK TO NORMAL: condition counter:[50 TO 100] recover
18:19:38.530 INFO [EventAdminAsyncThread #21] hostName:LT-C02R90TRG8WM
alertUUID:8a6d9c58-5acb-4774-9d09-dd2d8fd42422
alertPattern:counter:[50 TO 100]
component.name:org.apache.karaf.decanter.collector.rest.servlet
alertLevel:WARN
felix.fileinstall.filename:file:/Users/jbonofre/Downloads/apache-karaf-4.2.8/etc/org.apache.karaf.decanter.collector.rest.servlet.cfg
unmarshaller.target:(dataFormat=json)
counter:10
type:restservlet
decanter.collector.name:rest-servlet
service.pid:org.apache.karaf.decanter.collector.rest.servlet
component.id:14
alertBackToNormal:true
payload:{"counter":10}
karafName:root
alias:/decanter/collect
alertTimestamp:1585329578512
hostAddress:192.168.0.11
timestamp:1585329578512
event.topics:decanter/alert/WARN

So, we can see the alert “back to normal” and the alert is not listed anymore by the decanter:alerts command:

karaf@root()> decanter:alerts
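
The sequence above (alert raised, silence while the alert stays active, then back to normal) behaves like a small state machine. The Python sketch below is only a toy model of that behaviour, not Decanter’s implementation: the class name RecoverableRule and its on_event method are hypothetical, and a plain numeric range check stands in for the counter:[50 TO 100] condition.

```python
class RecoverableRule:
    """Toy model of a recoverable alert rule (hypothetical, not Decanter code)."""

    def __init__(self, low, high):
        self.low = low
        self.high = high
        self.active = False  # True while an alert is ongoing

    def on_event(self, counter):
        """Return the notification this event would trigger, or None."""
        matches = self.low <= counter <= self.high
        if matches and not self.active:
            self.active = True
            return "ALERT"
        if not matches and self.active:
            self.active = False
            return "BACK TO NORMAL"
        return None  # state unchanged, nothing to report


# Same three events as in the walkthrough: 60, then 70, then 10.
rule = RecoverableRule(50, 100)
notifications = [rule.on_event(value) for value in (60, 70, 10)]
print(notifications)
```

The second event produces no notification because the rule is already active, which mirrors the empty log seen after sending counter 70.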

“Time period” alerts

The Decanter alerting service also supports a new kind of alert: the “time period” alert. The alert is only sent if its condition has stayed “active” for a given time frame.

The idea is to avoid “false positives”. For instance, the thread count can rise to 200 for a short period of time without it being a problem. However, if the thread count stays at 200 or more for more than 2 minutes, then we consider it an alert.
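
To make the idea concrete, here is a toy model in Python. It is a sketch under assumptions, not how Decanter implements it: the class TimePeriodRule and its on_event method are hypothetical, timestamps are passed in explicitly as seconds, and I assume that an event below the threshold resets the waiting window.

```python
class TimePeriodRule:
    """Toy model of a "time period" alert rule (hypothetical, not Decanter code)."""

    def __init__(self, threshold, period_seconds):
        self.threshold = threshold
        self.period_seconds = period_seconds
        self.active_since = None  # time of the first matching event, if any

    def on_event(self, value, now):
        """Return True when the alert should actually be sent."""
        if value < self.threshold:
            # Assumption: a non-matching event cancels the pending alert.
            self.active_since = None
            return False
        if self.active_since is None:
            self.active_since = now  # the alert becomes "ongoing" but is not sent yet
        return (now - self.active_since) >= self.period_seconds


# Sustained load: the condition still holds more than 2 minutes later.
rule = TimePeriodRule(threshold=200, period_seconds=120)
first = rule.on_event(210, now=0)
second = rule.on_event(220, now=130)
print(first, second)

# Short spike: the value drops back before the period has elapsed.
spike = TimePeriodRule(threshold=200, period_seconds=120)
spike.on_event(210, now=0)
spike.on_event(150, now=30)
late = spike.on_event(210, now=140)
print(late)
```

In the first scenario only the second event sends the alert; in the second scenario the spike never lasts long enough, so nothing is sent.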

Let’s create a “time period” alert in the etc/org.apache.karaf.decanter.alerting.service.cfg configuration file:

rule.third="{'condition':'threadCount:[200 TO *]','level':'ERROR','period':'2MINUTES'}"

Now, we send the following message using curl:

curl -X POST -H "Content-Type: application/json" -d "{\"threadCount\":210}" http://localhost:8181/decanter/collect

We don’t see anything in the log, but we can see an “ongoing” alert using the decanter:alerts command:

karaf@root()> decanter:alerts
=========================================
| hostName = LT-C02R90TRG8WM
| alertUUID = bb2073e1-fa73-4ab2-b445-a7002153384f
| component.name = org.apache.karaf.decanter.collector.rest.servlet
| threadCount = 210
| felix.fileinstall.filename = file:/Users/jbonofre/Downloads/apache-karaf-4.2.8/etc/org.apache.karaf.decanter.collector.rest.servlet.cfg
| unmarshaller.target = (dataFormat=json)
| type = restservlet
| alertRule = third
| decanter.collector.name = rest-servlet
| service.pid = org.apache.karaf.decanter.collector.rest.servlet
| component.id = 14
| event.topics = decanter/collect/rest-servlet
| payload = {"threadCount":210}
| karafName = root
| alias = /decanter/collect
| alertTimestamp = 1585330282607
| hostAddress = 192.168.0.11
| timestamp = 1585330282607

Now, let’s wait 2 minutes and send a new message:

curl -X POST -H "Content-Type: application/json" -d "{\"threadCount\":220}" http://localhost:8181/decanter/collect

Now, we see the alert in the log:

18:34:05.167 ERROR [EventAdminAsyncThread #23] DECANTER ALERT: condition threadCount:[200 TO *]
18:34:05.168 ERROR [EventAdminAsyncThread #23] hostName:LT-C02R90TRG8WM
alertUUID:bb2073e1-fa73-4ab2-b445-a7002153384f
alertPattern:threadCount:[200 TO *]
component.name:org.apache.karaf.decanter.collector.rest.servlet
alertLevel:ERROR
threadCount:210
felix.fileinstall.filename:file:/Users/jbonofre/Downloads/apache-karaf-4.2.8/etc/org.apache.karaf.decanter.collector.rest.servlet.cfg
unmarshaller.target:(dataFormat=json)
type:restservlet
alertRule:third
decanter.collector.name:rest-servlet
service.pid:org.apache.karaf.decanter.collector.rest.servlet
component.id:14
alertBackToNormal:false
payload:{"threadCount":210}
karafName:root
alias:/decanter/collect
alertTimestamp:1585330282607
hostAddress:192.168.0.11
timestamp:1585330282607
event.topics:decanter/alert/ERROR

Apache Lucene under the hood

In the previous sections, we saw the kinds of alerts supported by the new alerting service. Under the hood, the Decanter alerting service uses Apache Lucene.

The Decanter alerting service stores alerts as documents in a Lucene index. By default, the storage location is ${KARAF_DATA}/decanter/alerting. That’s where the Lucene index files live on the filesystem.

Alert rule conditions are plain Apache Lucene queries. You can find details about the Lucene query syntax here: https://lucene.apache.org/core/8_5_0/queryparser/org/apache/lucene/queryparser/classic/package-summary.html#package.description.

This gives users very powerful conditions: multiple properties, logical operators, negation, ranges, …
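
As an illustration, the Python sketch below assembles rule lines for the configuration file from a few Lucene query strings. The helper rule_line is hypothetical (it is not part of Decanter), and the rule names and the fields message, other and type are only examples. The keys condition, level, recoverable and period are the ones used in the rules shown earlier in this post, and the queries use the Lucene classic query syntax (AND, NOT, ranges). Whether a term query such as message:foo behaves as a strict equality check depends on how the field is indexed, which I don’t cover here.

```python
def rule_line(name, condition, level, recoverable=None, period=None):
    """Format one rule line in the style used in this post (hypothetical helper)."""
    parts = ["'condition':'" + condition + "'", "'level':'" + level + "'"]
    if recoverable is not None:
        parts.append("'recoverable':" + ("true" if recoverable else "false"))
    if period is not None:
        parts.append("'period':'" + period + "'")
    return "rule." + name + '="{' + ",".join(parts) + '}"'


examples = [
    # Several attributes at once, which the old checker could not express.
    rule_line("both", "message:foo AND other:bar", "WARN"),
    # Negation combined with a wildcard match.
    rule_line("notrest", "message:* AND NOT type:restservlet", "WARN"),
    # Range query on a recoverable rule, as in the second rule of this post.
    rule_line("mysecondalert", "counter:[50 TO 100]", "WARN", recoverable=True),
    # Open-ended range with a time period, as in the third rule of this post.
    rule_line("third", "threadCount:[200 TO *]", "ERROR", period="2MINUTES"),
]
for line in examples:
    print(line)
```

The last two lines printed are identical to the mysecondalert and third rules used above; the first two are untested illustrations of the syntax.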

The Decanter alerting service automatically keeps the index up to date and clean, removing alert documents once they are no longer needed.

In any case, you can always completely clean all alerts and all documents using the decanter:alerts-cleanup command.

Alerting service in action

Let’s take a concrete use case for the Decanter alerting service. Here, we will use the email alerter (to receive an email when we have an alert) and the JMX collector (to check the running Karaf metrics).

Let’s install the JMX collector and the email alerter:

karaf@root()> feature:install decanter-collector-jmx
karaf@root()> feature:install decanter-alerting-email

First, let’s configure the email alerter in etc/org.apache.karaf.decanter.alerting.email.cfg.

Then, we configure an alert rule on ThreadCount in the etc/org.apache.karaf.decanter.alerting.service.cfg configuration file:

rule.threadCount="{'condition':'ThreadCount:[20 TO *]','level':'ERROR'}"

NB: 20 is a very low value, chosen just for the demo 😉 My Apache Karaf instance was using about 90 threads.

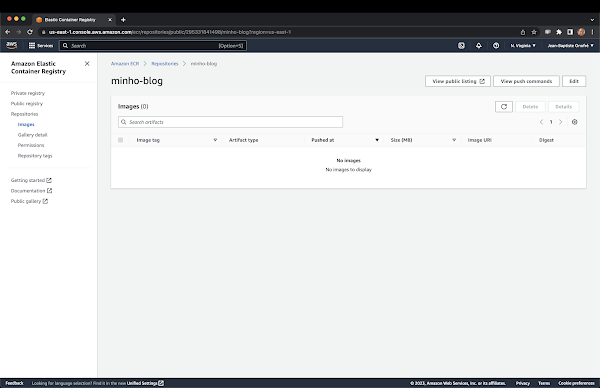

Then, very soon, you should receive an email looking like:

What’s next?

You can find the Pull Request with the alerting service refactoring here: https://github.com/apache/karaf-decanter/pull/144.

I think that the new Decanter alerting service is a great improvement of the alert layer compared to what we had before. We can imagine new use cases and features that would be easy to implement on top of it.

The three kinds of alerts I implemented are, I think, the most commonly used. If you have other ideas or use cases in mind, feel free to discuss them on the mailing list, create a Jira issue or ping me directly.
