Apache Karaf, Cellar, Camel, ActiveMQ monitoring with ELK (ElasticSearch, Logstash, and Kibana)

Apache Karaf, Cellar, Camel, and ActiveMQ provide a lot of information via JMX.

Moreover, the log files are another very useful source of information.

These two sources are very interesting, but for “real life” monitoring we need some additional features:

- The JMX information and log messages should be stored so that they can be queried later and kept as history. For instance, using jconsole, you can read all the JMX attributes to get the current numbers, but these numbers have to be stored somewhere. It’s much the same for the logs: most of the time, you define a log file rotation, or you periodically clean up the logs. So the log messages should be stored as well, to be queried later.

- Numbers are good, graphics are even better. Once the JMX “numbers” are stored somewhere, a good feature is to use them to create charts. We can also define some kind of SLA: if at some point a number is not “acceptable” (for instance, greater than a “watermark” value), we should raise an alert.

- For high availability and scalability, most production systems use multiple Karaf instances (synchronized with Cellar, for instance). It means that the log files are spread across different machines. In that case, it’s really helpful to “centralize” the log messages.
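The “watermark” idea from the second point can be sketched in a few lines of Python. This is only an illustration: the function name, the sample values, and the threshold of 200 are hypothetical and do not come from any of the tools discussed here.

```python
from typing import Iterable, List, Tuple

Sample = Tuple[str, float]  # (timestamp, value), e.g. a polled JMX attribute


def over_watermark(samples: Iterable[Sample], watermark: float) -> List[Sample]:
    """Return the samples whose value is strictly greater than the watermark."""
    return [(ts, value) for ts, value in samples if value > watermark]


# Hypothetical thread-count samples, for illustration only.
thread_counts = [
    ("2014-03-17T14:30:00Z", 180.0),
    ("2014-03-17T14:30:10Z", 215.0),
    ("2014-03-17T14:30:20Z", 190.0),
]

for ts, value in over_watermark(thread_counts, watermark=200.0):
    print(f"ALERT {ts}: thread count {value} is above the watermark")
```

Such a check only makes sense once the numbers are stored somewhere, which is the point of the first item.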

Of course, there are already open source solutions (zabbix, nagios, etc) or commercial solutions (dynatrace, etc) to cover these needs.

In this blog post, I introduce one possible solution leveraging “big data” tools: we will see how to use the ELK (Elasticsearch, Logstash, and Kibana) stack.

Topology

For this example, let’s say we have the following architecture:

- node1 is a machine hosting a Karaf container with a set of Camel routes.

- node2 is a machine hosting a Karaf container with another set of Camel routes.

- node3 is a machine hosting an ActiveMQ broker (used by the Camel routes from node1 and node2).

- monitor is a machine hosting the monitoring platform.

Local to node1, node2, and node3, we install and configure logstash with both the file and JMX input plugins. This logstash reads the log messages and polls the JMX MBean attributes, and sends them to a “central” Redis server (using the redis output plugin).

On monitor, we install:

- redis server to receive the messages and events coming from logstash installed on node1, node2, and node3

- elasticsearch to store the messages and events

- a first logstash acting as an indexer to take the messages/events from redis and store them into elasticsearch (including the update of indexes, etc)

- a second logstash providing the kibana web console
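To make the data flow concrete, here is a minimal Python sketch of this topology. It is only a model: an in-memory deque stands in for the Redis list, a plain Python list stands in for Elasticsearch, and the event fields and messages are hypothetical.

```python
import json
from collections import deque

# Stand-in for the Redis list named "logstash" (no real Redis involved).
redis_list = deque()


def collect(node: str, event_type: str, message: str) -> None:
    """Collector side: serialize an event and append it to the tail of the list."""
    event = {"host": node, "type": event_type, "message": message}
    redis_list.append(json.dumps(event))


def index_all(store: list) -> int:
    """Indexer side: pop events from the head of the list and store them."""
    count = 0
    while redis_list:
        store.append(json.loads(redis_list.popleft()))
        count += 1
    return count


collect("node1", "log", "Route route1 started")
collect("node3", "jmx", "QueueSize=0")

elasticsearch_stub = []  # stand-in for the Elasticsearch index
indexed = index_all(elasticsearch_stub)
print(indexed, [e["host"] for e in elasticsearch_stub])
```

The collectors never talk to Elasticsearch directly: the list decouples them from the indexer, so events can queue up for a while if the indexer is stopped.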

Redis and Elasticsearch

Redis

Redis is a key-value store, but it can also act as a broker that receives the messages/events from the different logstash instances (node1, node2, and node3).

For the demo, I use Redis 2.8.7 (which you can download from http://download.redis.io/releases/redis-2.8.7.tar.gz).

We uncompress the redis tarball in the /opt/monitor folder:

    cp redis-2.8.7.tar.gz /opt/monitor
    cd /opt/monitor
    tar zxvf redis-2.8.7.tar.gz

Now, we have to compile Redis server on the machine. To do so, we have to execute make in the Redis src folder:

    cd redis-2.8.7/src
    make

NB: this step requires make and gcc installed on the machine.

make created a redis-server binary in the src folder. It’s the binary that we use to start Redis:

    ./redis-server --loglevel verbose
    [12130] 16 Mar 21:04:28.387 # Unable to set the max number of files limit to 10032 (Operation not permitted), setting the max clients configuration to 3984.
                    _._
               _.-``__ ''-._
          _.-``    `.  `_.  ''-._           Redis 2.8.7 (00000000/0) 64 bit
      .-`` .-```.  ```\/    _.,_ ''-._
     (    '      ,       .-`  | `,    )     Running in stand alone mode
     |`-._`-...-` __...-.``-._|'` _.-'|     Port: 6379
     |    `-._   `._    /     _.-'    |     PID: 12130
      `-._    `-._  `-./  _.-'    _.-'
     |`-._`-._    `-.__.-'    _.-'_.-'|
     |    `-._`-._        _.-'_.-'    |           http://redis.io
      `-._    `-._`-.__.-'_.-'    _.-'
     |`-._`-._    `-.__.-'    _.-'_.-'|
     |    `-._`-._        _.-'_.-'    |
      `-._    `-._`-.__.-'_.-'    _.-'
          `-._    `-.__.-'    _.-'
              `-._        _.-'
                  `-.__.-'
    [12130] 16 Mar 21:04:28.388 # Server started, Redis version 2.8.7
    [12130] 16 Mar 21:04:28.388 # WARNING overcommit_memory is set to 0! Background save may fail under low memory condition. To fix this issue add 'vm.overcommit_memory = 1' to /etc/sysctl.conf and then reboot or run the command 'sysctl vm.overcommit_memory=1' for this to take effect.
    [12130] 16 Mar 21:04:28.388 * The server is now ready to accept connections on port 6379
    [12130] 16 Mar 21:04:28.389 - 0 clients connected (0 slaves), 443376 bytes in use

The redis server is now ready to accept connections coming from the “remote” logstash.

Elasticsearch

We use elasticsearch as the storage backend for all messages and events. For this demo, I use elasticsearch 1.0.1, which you can download from https://download.elasticsearch.org/elasticsearch/elasticsearch/elasticsearch-1.0.1.tar.gz.

We uncompress the elasticsearch tarball in the /opt/monitor folder:

    cp elasticsearch-1.0.1.tar.gz /opt/monitor
    cd /opt/monitor
    tar zxvf elasticsearch-1.0.1.tar.gz

We start elasticsearch with the bin/elasticsearch binary (the default configuration is OK):

    cd elasticsearch-1.0.1
    bin/elasticsearch
    [2014-03-16 21:16:13,783][INFO ][node ] [Solarr] version[1.0.1], pid[12466], build[5c03844/2014-02-25T15:52:53Z]
    [2014-03-16 21:16:13,783][INFO ][node ] [Solarr] initializing ...
    [2014-03-16 21:16:13,786][INFO ][plugins ] [Solarr] loaded [], sites []
    [2014-03-16 21:16:15,763][INFO ][node ] [Solarr] initialized
    [2014-03-16 21:16:15,764][INFO ][node ] [Solarr] starting ...
    [2014-03-16 21:16:15,902][INFO ][transport ] [Solarr] bound_address {inet[/0:0:0:0:0:0:0:0:9300]}, publish_address {inet[/192.168.134.11:9300]}
    [2014-03-16 21:16:18,990][INFO ][cluster.service ] [Solarr] new_master [Solarr][V9GO0DiaT4SFmRmxgwYv0A][vostro][inet[/192.168.134.11:9300]], reason: zen-disco-join (elected_as_master)
    [2014-03-16 21:16:19,010][INFO ][discovery ] [Solarr] elasticsearch/V9GO0DiaT4SFmRmxgwYv0A
    [2014-03-16 21:16:19,021][INFO ][http ] [Solarr] bound_address {inet[/0:0:0:0:0:0:0:0:9200]}, publish_address {inet[/192.168.134.11:9200]}
    [2014-03-16 21:16:19,072][INFO ][gateway ] [Solarr] recovered [0] indices into cluster_state
    [2014-03-16 21:16:19,072][INFO ][node ] [Solarr] started

Logstash

Logstash is a tool for managing events and logs.

It works with a chain of input, filter, output.
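The input, filter, output chain can be pictured as three small stages plugged into each other. The following Python sketch is an analogy only, not logstash code; the stage names and the sample lines are hypothetical.

```python
from typing import Dict, Iterable, Iterator, List

Event = Dict[str, str]


def file_input(lines: Iterable[str]) -> Iterator[Event]:
    """Input stage: turn each raw line into an event."""
    for line in lines:
        yield {"type": "log", "message": line.rstrip("\n")}


def drop_empty(events: Iterable[Event]) -> Iterator[Event]:
    """Filter stage: discard events with a blank message."""
    for event in events:
        if event["message"].strip():
            yield event


def list_output(events: Iterable[Event], sink: List[Event]) -> None:
    """Output stage: deliver events to a destination (here, a plain list)."""
    sink.extend(events)


sink: List[Event] = []
list_output(drop_empty(file_input(["started\n", "\n", "route1 up\n"])), sink)
print([e["message"] for e in sink])
```

In the configurations used in this post there is no filter stage: events go straight from the inputs to the output.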

On node1, node2, and node3, we will set up logstash with:

- a file input plugin to read the log files

- a jmx input plugin to read the different MBeans attributes

- a redis output to send the messages and events to the monitor machine.

For this blog, I use logstash 1.4 SNAPSHOT with a contrib that I did. You can find my modified plugin on my github: https://github.com/jbonofre/logstash-contrib.

The first thing is to check out the latest logstash codebase and build it:

    git clone https://github.com/elasticsearch/logstash/
    cd logstash
    make tarball

It will create the logstash distribution tarball in the build folder.

We can install logstash in a folder (for instance /opt/monitor/logstash):

    mkdir /opt/monitor
    cp build/logstash-1.4.0.rc1.tar.gz /opt/monitor
    cd /opt/monitor
    tar zxvf logstash-1.4.0.rc1.tar.gz
    rm logstash-1.4.0.rc1.tar.gz

JMX is not a “standard” logstash plugin; it comes from the logstash-contrib project. I modified the logstash JMX plugin to work “smoothly” with the Karaf MBeanServer. Until my pull request is integrated in logstash-contrib (I hope ;)), you have to clone my github fork:

    git clone https://github.com/jbonofre/logstash-contrib/
    cd logstash-contrib
    make tarball

We can add the contrib plugins into our logstash installation (in /opt/monitor/logstash-1.4.0.rc1 folder):

    cd build
    tar zxvf logstash-contrib-1.4.0.beta2.tar.gz
    cd logstash-contrib-1.4.0.beta2
    cp -r * /opt/monitor/logstash-1.4.0.rc1

Our logstash installation is now ready, including the logstash-contrib plugins.

It means that on node1, node2, node3 and monitor, you should have the /opt/monitor/logstash-1.4.0.rc1 folder with the installation (you can use scp or rsync to install logstash on the machines).

Indexer

On the monitor machine, we have a logstash instance acting as an indexer: it gets the messages from redis and stores them in elasticsearch.

We create the /opt/monitor/logstash-1.4.0.rc1/conf/indexer.conf file containing:

    input {
      redis {
        host => "localhost"
        data_type => "list"
        key => "logstash"
        codec => json
      }
    }
    output {
      elasticsearch {
        host => "localhost"
      }
    }

We can start logstash using this configuration file:

    cd /opt/monitor/logstash-1.4.0.rc1
    bin/logstash -f conf/indexer.conf
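With this configuration, the indexer writes the events into one elasticsearch index per day (the elasticsearch log shown later in this post mentions an index named logstash-2014.03.17). As a rough sketch of that naming scheme, assuming the index is derived from the event timestamp in UTC with a logstash- prefix, the mapping could look like this in Python. The function name is hypothetical.

```python
from datetime import datetime, timezone


def daily_index(timestamp: str, prefix: str = "logstash") -> str:
    """Map an ISO-8601 UTC timestamp to a per-day index name."""
    parsed = datetime.strptime(timestamp, "%Y-%m-%dT%H:%M:%S.%fZ")
    day = parsed.replace(tzinfo=timezone.utc)
    return f"{prefix}-{day:%Y.%m.%d}"


print(daily_index("2014-03-17T14:30:59.584Z"))
```

One index per day makes it easy to drop old data: removing a day of history is just deleting the corresponding index.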

Collector

On node1, node2, and node3, logstash will act as a collector:

- the file input plugin will read the messages from the log files (you can configure multiple log files)

- the jmx input plugin will periodically poll MBean attributes

Both will send messages to the redis server using the redis output plugin.

We create a folder /opt/monitor/logstash-1.4.0.rc1/conf. It’s where we store the logstash configuration. In this folder, we create a collector.conf file.

For node1 and node2 (both hosting a karaf container with camel routes), the collector.conf file contains:

    input {
      file {
        type => "log"
        path => ["/opt/karaf/data/log/*.log"]
      }
      jmx {
        path => "/opt/monitor/logstash-1.4.0.rc1/conf/jmx"
        polling_frequency => 10
        type => "jmx"
        nb_thread => 4
      }
    }
    output {
      redis {
        host => "monitor"
        data_type => "list"
        key => "logstash"
      }
    }

On node3 (hosting an ActiveMQ broker), the collector.conf is the same, just the location of the log file is different:

    input {
      file {
        type => "log"
        path => ["/opt/activemq/data/*.log"]
      }
      jmx {
        path => "/opt/monitor/logstash-1.4.0.rc1/conf/jmx"
        polling_frequency => 10
        type => "jmx"
        nb_thread => 4
      }
    }
    output {
      redis {
        host => "monitor"
        data_type => "list"
        key => "logstash"
      }
    }

The redis output plugin sends the messages/events to the redis server located on the “monitor” machine.

These messages and events come from two input plugins:

- the file input plugin takes the path of the log file (using glob)

- the jmx input plugin takes a folder. This folder contains JSON files (see later) with the MBean queries. The plugin executes the queries every 10 seconds (polling_frequency).

So, the jmx input plugin reads all files located in the /opt/monitor/logstash-1.4.0.rc1/conf/jmx folder.

On node1 and node2 (again hosting a karaf container with camel routes), for instance, we want to monitor the number of threads on the Karaf instance (using the Threading MBean), and a route named “route1” (using the Camel route MBean).

We specify this in the /opt/monitor/logstash-1.4.0.rc1/conf/jmx/karaf file:

    {
      "host" : "localhost",
      "port" : 1099,
      "url" : "service:jmx:rmi:///jndi/rmi://localhost:1099/karaf-root",
      "username" : "karaf",
      "password" : "karaf",
      "alias" : "node1",
      "queries" : [
        {
          "object_name" : "java.lang:type=Threading",
          "object_alias" : "Threading"
        },
        {
          "object_name" : "org.apache.camel:context=*,type=routes,name=\"route1\"",
          "object_alias" : "Route1"
        }
      ]
    }

On node3, we will have a different JMX configuration file (for instance /opt/monitor/logstash-1.4.0.rc1/conf/jmx/activemq) containing the ActiveMQ MBeans that we want to query.
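The Karaf file is the same on node1 and node2 apart from the alias, which presumably should identify the node. Rather than editing it by hand on each machine, it could be generated. Here is a small Python sketch, assuming the fields shown in the file above; the helper name jmx_config and the choice of "node2" as the alias are mine.

```python
import json


def jmx_config(alias: str, host: str = "localhost", port: int = 1099) -> dict:
    """Build the JMX query description for one Karaf node."""
    return {
        "host": host,
        "port": port,
        "url": f"service:jmx:rmi:///jndi/rmi://{host}:{port}/karaf-root",
        "username": "karaf",
        "password": "karaf",
        "alias": alias,  # assumption: one distinct alias per node
        "queries": [
            {"object_name": "java.lang:type=Threading", "object_alias": "Threading"},
            {
                "object_name": 'org.apache.camel:context=*,type=routes,name="route1"',
                "object_alias": "Route1",
            },
        ],
    }


# Print the file content for node2; redirect it to conf/jmx/karaf on that node.
print(json.dumps(jmx_config("node2"), indent=2))
```

json.dumps takes care of escaping the quotes around the route name, which is easy to get wrong when writing the file manually.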

Now, we can start the logstash “collector”:

    cd /opt/monitor/logstash-1.4.0.rc1
    bin/logstash -f conf/collector.conf

We can see the clients connected in the redis log:

    [12130] 17 Mar 14:33:27.041 - Accepted 127.0.0.1:46598
    [12130] 17 Mar 14:33:31.267 - 2 clients connected (0 slaves), 484992 bytes in use

and the data populated in the elasticsearch log:

    [2014-03-17 14:21:59,539][INFO ][cluster.service ] [Solarr] added {[logstash-vostro-32001-2010][dhXgnFLwTHmbdsawAEJbyg][vostro][inet[/192.168.134.11:9301]]{client=true, data=false},}, reason: zen-disco-receive(join from node[[logstash-vostro-32001-2010][dhXgnFLwTHmbdsawAEJbyg][vostro][inet[/192.168.134.11:9301]]{client=true, data=false}])
    [2014-03-17 14:30:59,584][INFO ][cluster.metadata ] [Solarr] [logstash-2014.03.17] creating index, cause [auto(bulk api)], shards [5]/[1], mappings [_default_]
    [2014-03-17 14:31:00,665][INFO ][cluster.metadata ] [Solarr] [logstash-2014.03.17] update_mapping [log] (dynamic)
    [2014-03-17 14:33:28,247][INFO ][cluster.metadata ] [Solarr] [logstash-2014.03.17] update_mapping [jmx] (dynamic)

Now, we have JMX data and log messages from different containers, brokers, etc. stored in one centralized place (the monitor machine).

We can now add a web application to read the data and create charts using the data.

Kibana

Kibana is the web application provided with logstash. The default configuration uses the elasticsearch default port. So, we just have to start Kibana on the monitor machine:

    cd /opt/monitor/logstash-1.4.0.rc1
    bin/logstash-web

We access Kibana at http://monitor:9292.

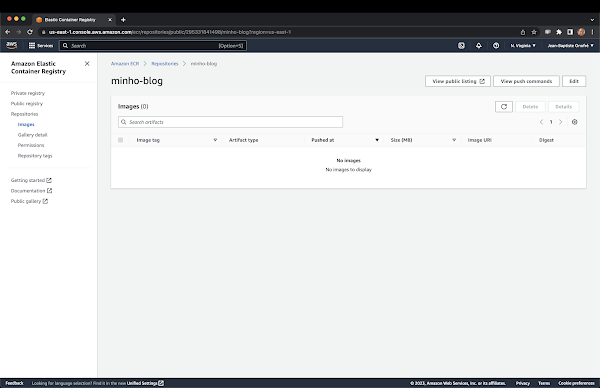

On the welcome page, we click on the “Logstash dashboard” link, and we arrive on a console looking like:

It’s time to configure Kibana.

We remove the default histogram, to add a custom one to chart the thread count.

First, we create a query to isolate the thread count for node1. Kibana uses the Apache Lucene query syntax.

Our query here is very simple: metric_path:"node1.Threading.ThreadCount".
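Judging from this query, the metric_path value appears to be the alias from the JMX configuration file, the object_alias of the query, and the attribute name, joined with dots. Under that assumption, the Kibana queries for any metric can be derived mechanically. A Python sketch (both helper names are hypothetical):

```python
def metric_path(alias: str, object_alias: str, attribute: str) -> str:
    """Join the three parts the way the queries in this post suggest."""
    return ".".join((alias, object_alias, attribute))


def lucene_term(field: str, value: str) -> str:
    """Build a field:"value" phrase query, escaping backslashes and quotes."""
    escaped = value.replace("\\", "\\\\").replace('"', '\\"')
    return f'{field}:"{escaped}"'


print(lucene_term("metric_path", metric_path("node1", "Threading", "ThreadCount")))
```

The same two helpers give the query for any other node or attribute, for example by replacing "node1" with the alias used on another machine.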

Now, we can create a histogram using this query, getting the metric_value_number:

Now, we want to chart the lastProcessingTime on the Camel route (to see for instance if the route takes more time at some point).

We create a new query to isolate the route1 lastProcessingTime on node1: metric_path:"node1.Route1.LastProcessingTime".

We can now create a histogram using this query, getting the metric_value_number:

For the demo, we can create a histogram chart to display the exchanges completed and failed for route1 on node1. We create two queries:

- metric_path:"node1.Route1.ExchangesFailed"

- metric_path:"node1.Route1.ExchangesCompleted"
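The same Lucene expressions are not tied to Kibana: they can also be sent directly to elasticsearch. The Python sketch below only builds the request bodies (it makes no HTTP call), assuming the query_string query type of the elasticsearch search API; the function name and the size of 10 are arbitrary choices.

```python
import json


def search_body(lucene_query: str, size: int = 10) -> str:
    """Wrap a Lucene expression in a query_string search request body."""
    body = {"query": {"query_string": {"query": lucene_query}}, "size": size}
    return json.dumps(body)


for attribute in ("ExchangesFailed", "ExchangesCompleted"):
    print(search_body(f'metric_path:"node1.Route1.{attribute}"'))
```

Each printed body could then be posted to the _search endpoint of the logstash indexes, for instance with curl.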

We create a new chart in the same row:

We clean up the events panel a bit. We create a query to display only the log messages (not the JMX queries): type:"log".

We configure the log event panel to change the name and use the log query:

We now have a kibana console looking like:

With this very simple kibana configuration, we have:

- a chart of the thread count on node1

- a chart of the last processing time for route1 (on node1)

- a chart of the exchanges (failed/completed) for route1 (on node1)

- a view of all log messages

You can now play with Kibana and add a lot of new charts leveraging all the information that you have in elasticsearch (both log messages and JMX data).

Next

I’m working on some new Karaf, Cellar, ActiveMQ, Camel features providing “native” and “enhanced” support for logstash. The purpose is to just type feature:install monitoring to get:

- jmx:* commands in Karaf

- broadcast of events to elasticsearch

- integration of redis, elasticsearch, and logstash in Karaf (to avoid installing them “externally” from Karaf), with ready-to-use configuration (pre-configured logstash jmx input plugin, pre-configured kibana console/charts, …).

If you have other ideas to enhance and improve monitoring in Karaf, Cellar, Camel, and ActiveMQ, don’t hesitate to propose them on the mailing lists! Any idea is welcome.
